Scraping dynamic websites—sites that change their content based on user interaction or real-time updates—poses unique challenges compared to static pages. Despite these obstacles, with the right tools and strategies, it's entirely feasible to extract valuable data for various purposes, such as market research or competitive analysis. Here's a brief overview to get you started:

- Dynamic websites offer personalized content, real-time updates, and interactive elements, pulling data from databases using server-side languages.

- Importance: For businesses, staying updated with the latest data from dynamic websites is crucial for making informed decisions.

- Tools: Selenium, Pyppeteer, and Playwright simulate user interactions in a real browser, while BeautifulSoup and Scrapy handle parsing and large-scale crawling.

- Challenges: Infinite scrolling and AJAX updates require specific strategies to ensure comprehensive data collection.

- Best Practices: Respecting robots.txt and pacing your requests keeps scraping ethical, while techniques such as proxy rotation help keep it sustainable.

To scrape dynamic websites effectively, understanding both the technical aspects of these sites and the ethical considerations of web scraping is essential. Tools like Selenium and Playwright, paired with smart strategies, can help overcome the hurdles of dynamic data extraction.

Key Characteristics

- Personalized content: These websites show you stuff based on what you like or do on the site. Think of getting product suggestions while shopping online.

- Real-time updates: Things on the site change as they happen. Like when new posts pop up on your social media without hitting refresh.

- Interactivity: You can click, comment, or vote on things, and the site will react right away.

- Database integration: The site pulls fresh info from databases, so what you see is always up to date.

- Dependence on server-side technologies: These sites are powered by languages and platforms (like PHP, ASP.NET, Node.js) that run behind the scenes on the server.

Examples of Dynamic Websites

Here are a few places you might have seen dynamic websites in action:

- YouTube: Your video recommendations change based on what you've watched before. Comments and views update without needing to reload the page.

- Twitter: New tweets show up instantly, and what's trending updates based on what everyone's talking about.

- Facebook: Your feed shows posts, ads, and suggestions just for you. You see friends' updates right away.

- Wikipedia: Articles get updated all the time by different people. You can see the latest changes as they happen.

- CNN.com: The site shows breaking news and updates as stories develop, without you having to refresh the page.

These sites are smart because they use databases and special coding to give everyone a unique and lively experience by showing fresh and personalized content.

Importance of Scraping Dynamic Content

Today, more websites are dynamic, which means they change based on what you're looking for or doing. This shift has made it really important for different professionals, like market researchers or recruiters, to be good at grabbing info from these websites. They need up-to-date data for making smart business choices, but getting this data is not straightforward because these websites keep changing.

Rising Demand from Businesses

Businesses need fresh info from the web for all sorts of reasons. For example, online stores change their product prices and stock levels all the time. Keeping track of this helps companies stay competitive. Job sites update listings constantly, so recruiters need to grab this data quickly to find potential candidates. Other uses include checking out social media for what people think about something, looking at real estate prices, or getting news stories for research.

But, the tools that used to work for simple websites don't do the job anymore. We need better tools that can handle websites that keep updating themselves.

The Scraping Challenge

Scraping dynamic websites is harder than it sounds. These websites don't just show all their info in a simple way that you can grab easily. They use a lot of JavaScript, which means the scraper has to pretend to be a browser to see everything. Also, these websites often try to block scrapers by using things like CAPTCHAs or by limiting how much data you can look at.

To get around these problems, you need:

- Browser automation tools (like Puppeteer and Playwright) that drive a headless browser, which loads websites just like a regular browser but without showing anything on screen.

- Proxies and residential IPs to hide the scraper's activities.

- Scraping frameworks (like Scrapy) that make it easier to get the data you need.

- Cloud infrastructure to run many scraping tasks at the same time.

Getting the setup right is key to scraping successfully.

Scraping Solutions

Luckily, there are ways to scrape dynamic websites well. Some services take care of the hard parts for you, so you can just focus on using the data. Sometimes, companies hire people to make custom scrapers for their specific needs.

If you're doing it yourself, tools like Selenium, Puppeteer, and Playwright are really helpful. They let you control the scraping process with some coding knowledge. This way, you can get the data from websites that change a lot, like social media or online stores.

In short, scraping dynamic websites takes more effort than grabbing data from simpler sites. But with the right tools and some smart setup, you can get the valuable info businesses need.

Tools for Scraping Dynamic Websites

When you want to collect information from websites that change a lot, you need special tools. Here's a simple breakdown of what you can use:

Selenium

- Think of it as a robot that can use a web browser just like you. It can click around and see things on pages that change.

- It's slower than plain HTTP requests because it drives a real browser. It waits for the initial page load, but content fetched later by JavaScript usually needs an explicit wait.

BeautifulSoup

- This is a tool for picking out specific bits of information from a web page. It's great for pulling out text or links.

- It can't load pages by itself, so you often use it with Selenium to get the full picture.

Scrapy

- This is for grabbing lots of data from many pages at once. It's fast and efficient but doesn't handle changing content on its own.

- To make it work for dynamic sites, you can pair it with a rendering add-on such as scrapy-playwright or Splash.

pyppeteer

- An unofficial Python port of Puppeteer. It drives a Chromium browser, so it can deal with pages that change.

- It doesn't automatically switch between different internet connections to hide its tracks.

Playwright

- It's like a newer, shinier version of Puppeteer and Selenium. It works with different web browsers and can handle changing pages.

- You can set it up to use different internet connections, but it takes a bit of extra work.

| Tool | Headless Browsing | JavaScript Support | Built-in Proxy Rotation |
|---|---|---|---|
| Selenium | ✅ | ✅ | ❌ |
| BeautifulSoup | ❌ | ❌ | ❌ |
| Scrapy | ❌ | ❌ | ❌ |
| pyppeteer | ✅ | ✅ | ❌ |
| Playwright | ✅ | ✅ | ❌ |

This table shows you quickly which tools can browse without showing a window (headless), which can deal with JavaScript (which makes pages dynamic), and which can change their internet connection to avoid detection. Selenium is a popular choice because it's good at pretending to be a real user. But, depending on what you need, you might mix and match these tools to get the best results.

Scraping Dynamic Websites in Python

Scraping dynamic websites with Python might sound tough, but it's really doable with the right steps. Here's a simple guide to help you scrape a website that changes its content:

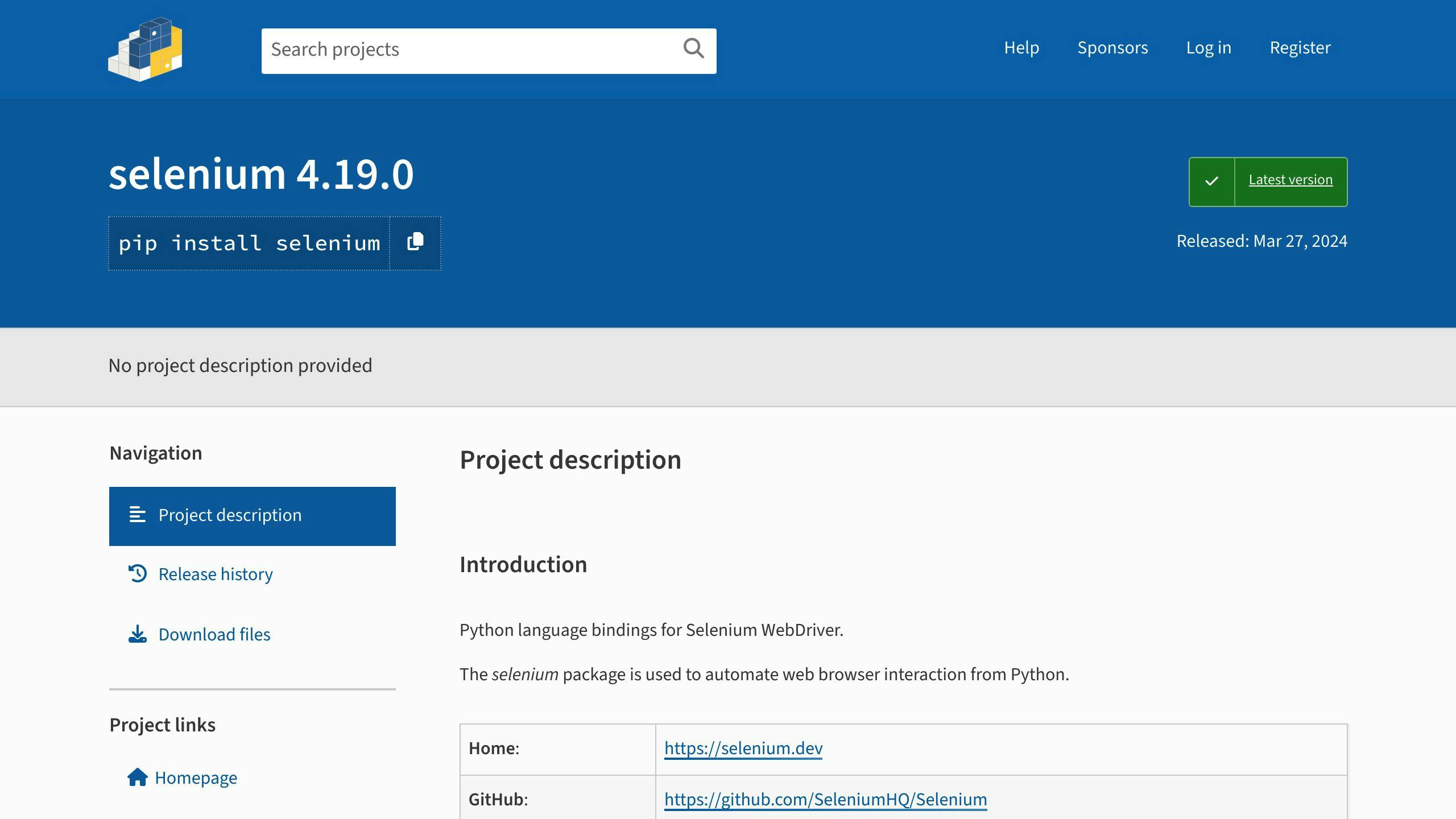

1. Install Selenium

First, you need Selenium. It's a tool that lets you tell a web browser what to do, just like you would. To get it, type this in your command line:

pip install selenium

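Recent Selenium releases (4.6 and later) include Selenium Manager, which downloads a matching driver automatically. On older versions, download the WebDriver for the browser you're using yourself (like ChromeDriver for Chrome or geckodriver for Firefox).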

2. Import Selenium

from selenium import webdriver

3. Initialize the WebDriver

This step opens up the browser.

driver = webdriver.Chrome()

4. Navigate to the target URL

driver.get("https://dynamicwebsite.com")

5. Interact with the page

Now, you tell the browser to do things on the site, like clicking buttons or typing in a search box. This is how you make the site show its dynamic content.

from selenium.webdriver.common.by import By

search_box = driver.find_element(By.ID, "search")

search_box.send_keys("shoes")

search_box.submit()

6. Parse the page source

After you've made the site show what you want, you can look at the page's code with Selenium.

page_source = driver.page_source

7. Extract data

Next, use Beautiful Soup to pick out the info you need from the page's code.

from bs4 import BeautifulSoup

soup = BeautifulSoup(page_source, 'html.parser')

prices = soup.find_all("span", {"class": "price"})
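
The find_all call returns tag objects, not numbers, so you'll usually pull out the text and clean it up before storing it. Here's a minimal sketch of that cleanup. The parse_price helper is a made-up name for illustration, and it assumes prices use "." for decimals and "," for thousands.

```python
import re
from decimal import Decimal


def parse_price(text):
    """Turn scraped text such as ' $1,299.99 ' into a Decimal.

    Returns None when the text contains no number. Assumes '.' is the
    decimal separator and ',' is a thousands separator.
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        return None
    return Decimal(match.group(0).replace(",", ""))


# With the `prices` list from the step above, this would look like:
#   values = [parse_price(tag.get_text()) for tag in prices]
sample_texts = [" $1,299.99 ", "USD 45", "Sold out"]
values = [parse_price(text) for text in sample_texts]
print(values)
```

Using Decimal instead of float avoids rounding surprises when you later add up or compare prices.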

8. Store and export data

Finally, save the info you got into a file or a database.
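
One simple way to do this is a CSV file, using Python's built-in csv module. The sketch below writes to an in-memory buffer so it runs anywhere. The write_rows helper and the sample records are made up for illustration.

```python
import csv
import io


def write_rows(rows, fileobj, fieldnames):
    """Write a list of dicts to fileobj as CSV, with a header row."""
    writer = csv.DictWriter(fileobj, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)


# Hypothetical scraped records; in practice these come from the extraction step.
rows = [
    {"name": "Trail shoe", "price": "59.99"},
    {"name": "Running shoe", "price": "74.50"},
]

# An in-memory buffer keeps the example self-contained. For a real file, use:
#   with open("prices.csv", "w", newline="", encoding="utf-8") as f:
#       write_rows(rows, f, fieldnames=["name", "price"])
buffer = io.StringIO()
write_rows(rows, buffer, fieldnames=["name", "price"])
csv_text = buffer.getvalue()
print(csv_text)
```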

That's it! With some practice, using Selenium to scrape websites that change their content can become a handy skill.

Common Challenges

Scraping dynamic websites can be tricky. Here are a few common problems and how to solve them.

Infinite Scroll

Many websites today load more content as you scroll down, so you don't have to keep clicking "Next Page."

But for scrapers, this can be tricky because they might not keep scrolling to get all the info. Here's how to handle it:

- Make sure to scroll a bit, wait for the new stuff to show up, and then scrape. Do this step by step.

- If there's a "Load More" button, click it.

- Look for URLs that have page numbers in them and go through each one.

- Use a bit of JavaScript to make the page scroll down automatically.

With some careful steps, scrapers can grab all the info from pages that keep loading more content.
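
The scroll-wait-repeat idea can be sketched like this. The scroll_to_bottom helper is a made-up name, and it assumes the page grows taller when new items load. It only needs an object with Selenium's execute_script method, so you can pass it the driver from the earlier steps.

```python
import time


def scroll_to_bottom(driver, pause=2.0, max_rounds=20):
    """Scroll until the page height stops growing or max_rounds is reached.

    `driver` is any object with Selenium's execute_script() method.
    Returns the number of scrolls that loaded new content.
    """
    loaded = 0
    last_height = driver.execute_script("return document.body.scrollHeight")
    for _ in range(max_rounds):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause)  # give the site time to fetch and render new items
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break  # nothing new appeared, so assume we reached the end
        last_height = new_height
        loaded += 1
    return loaded


# Typical use, once a page is open in Selenium:
#   scroll_to_bottom(driver)
#   page_source = driver.page_source
```

The max_rounds cap matters because some feeds never run out of content.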

AJAX Page Updates

Websites often use something called AJAX to update parts of the page without reloading everything. This means scrapers might not notice these updates.

Here's what you can do:

- Wait a bit longer after doing something on the page, so all the AJAX content has time to show up.

- Keep an eye out for signs that the page is loading new stuff, like spinning icons.

- Tools like Selenium or Puppeteer can run JavaScript for you, making sure you see the updated content.

- Regularly check if the page has changed to catch any new updates.

It's a bit more work, but with these adjustments, scrapers can catch content that loads in bits and pieces.
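
Instead of sleeping for a fixed time, you can poll until the content you want actually appears. Selenium ships its own version of this idea (WebDriverWait), so treat the sketch below as an illustration of how polling works, not a replacement. The wait_for helper is a made-up name.

```python
import time


def wait_for(condition, timeout=10.0, interval=0.5):
    """Call `condition` repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds pass.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


# With a Selenium driver this might be used as:
#   prices = wait_for(lambda: driver.find_elements("css selector", "span.price"))
```

Polling returns as soon as the content shows up, so it's usually both faster and more reliable than a long fixed pause.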

Best Practices

When you're scraping websites that constantly change, like online stores or social media, you need to be smart and respectful about it. Here are some straightforward tips to do it right:

Respect Robots.txt

Most websites publish a file called robots.txt that lists which paths automated visitors are asked to stay away from. Ignoring this can get you blocked, so always check it first.
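
Python's standard library can read these rules for you. This sketch parses a sample file from a string so it runs offline. The rules, the example.com URLs, and the "my-scraper" bot name are all made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Sample rules, parsed from a string so the example runs offline.
# For a live site you would instead call:
#   parser.set_url("https://example.com/robots.txt"); parser.read()
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())

USER_AGENT = "my-scraper"  # hypothetical bot name
can_fetch_products = parser.can_fetch(USER_AGENT, "https://example.com/products")
can_fetch_checkout = parser.can_fetch(USER_AGENT, "https://example.com/checkout/cart")
crawl_delay = parser.crawl_delay(USER_AGENT)  # seconds, or None if not specified

print(can_fetch_products, can_fetch_checkout, crawl_delay)
```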

Set Reasonable Crawl Delays

Don't rush and send too many requests to a website all at once. This might make them think you're up to no good. Instead, wait a bit between each request, like 5-10 seconds, to act more like a regular visitor.
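
A small helper can enforce that pause for you. Here's one possible sketch. The Throttle class is a made-up name, and the 5-10 second defaults simply mirror the range suggested above.

```python
import random
import time


class Throttle:
    """Enforce a randomized minimum gap between consecutive requests."""

    def __init__(self, min_delay=5.0, max_delay=10.0):
        self.min_delay = min_delay
        self.max_delay = max_delay
        self._last_request = None

    def wait(self):
        """Sleep just long enough to honor the gap; return the seconds slept."""
        slept = 0.0
        if self._last_request is not None:
            target_gap = random.uniform(self.min_delay, self.max_delay)
            elapsed = time.monotonic() - self._last_request
            slept = max(0.0, target_gap - elapsed)
            time.sleep(slept)
        self._last_request = time.monotonic()
        return slept


# Typical use:
#   throttle = Throttle()
#   for url in urls:
#       throttle.wait()
#       driver.get(url)
```

Because the helper measures time since the last request, slow pages don't add extra waiting on top of the delay.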

Handle CAPTCHAs

Sometimes, websites will ask if you're a robot by showing you a CAPTCHA. You can use services like 2Captcha that solve these for you, so you don't get stuck.

Use Proxy Rotation

If a website sees too much activity from one internet address, it might block you. Using different proxies (kind of like wearing disguises) helps avoid this problem.
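
The simplest form of rotation is round-robin: use each proxy in turn, then start over. In this sketch the proxy addresses are placeholders from a range reserved for documentation, and proxy_rotation is a made-up helper name. You'd swap in the addresses from your own proxy provider.

```python
from itertools import cycle

# Placeholder addresses (203.0.113.0/24 is reserved for documentation).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def proxy_rotation(proxies):
    """Yield proxy settings in round-robin order, forever."""
    for proxy in cycle(proxies):
        # A scheme-to-proxy mapping, the shape accepted by
        # urllib.request.ProxyHandler.
        yield {"http": proxy, "https": proxy}


rotation = proxy_rotation(PROXIES)
first_four = [next(rotation)["http"] for _ in range(4)]
print(first_four)
```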

Randomize User Agents

Switch up your user agent, which tells the website what kind of device or browser you're using. This makes you blend in better.
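
Here's a minimal sketch of picking a user agent at random. The strings in the list are illustrative only and will go out of date, and random_headers is a made-up helper name.

```python
import random

# Illustrative strings only; real ones go stale, so refresh them periodically.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def random_headers(rng=random):
    """Build request headers with a randomly chosen User-Agent."""
    return {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": "en-US,en;q=0.9",
    }


headers = random_headers()
print(headers["User-Agent"])
```

Passing in your own random.Random instance as rng makes the choice repeatable, which helps when you're debugging.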

Catch Errors Gracefully

Things don't always go smoothly. Your scraper might run into problems like losing connection or not finding what it's looking for. Make sure your code can handle these bumps without crashing.
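
A common pattern is to retry a failed step a few times, waiting longer after each failure. The sketch below shows one way to do that. The with_retries helper is a made-up name, and which errors are worth retrying depends on your scraper.

```python
import time


def with_retries(action, attempts=3, base_delay=1.0, retry_on=(Exception,)):
    """Run `action`, retrying with exponential backoff on the given errors."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on as exc:
            if attempt == attempts:
                raise  # out of retries: let the caller see the real error
            delay = base_delay * 2 ** (attempt - 1)
            print(f"attempt {attempt} failed ({exc!r}); retrying in {delay:.2f}s")
            time.sleep(delay)


# Typical use with Selenium (TimeoutException lives in selenium.common.exceptions):
#   with_retries(lambda: driver.get(url), retry_on=(TimeoutException,))
```

Limiting retry_on to temporary problems, like timeouts, keeps real bugs (such as a wrong selector) from being retried over and over.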

Scrape Data Responsibly

Only take the information you really need and don't overdo it. Also, don't sell the data without permission. Being respectful and ethical is key.

By sticking to these pointers, you can collect data from dynamic sites more effectively and avoid getting into trouble. Remember, the goal is to be smart about how you scrape, using the right tools like Selenium, Puppeteer, or Playwright, and always being considerate of the websites you visit.

Conclusion

Scraping websites that keep changing is a bit different from the usual way we collect data from the web, but it lets us get our hands on fresh, valuable information. Here's what you should remember:

- Websites that update their content automatically are called dynamic. They can show you new, personalized info because of special tech like JavaScript and databases.

- Nowadays, lots of businesses really need to gather data from these sites to make smart choices. But, scraping these sites can be tough because they're always changing and they might try to block scrapers.

- Tools like Selenium and Playwright are like invisible users that can open web pages and interact with them to get to the changing content. Using different internet connections (proxies) and certain tools can also help a lot.

- You might run into issues like pages that keep loading more content as you scroll or parts of the page that update on their own. But with a few tweaks, you can make sure you're catching everything.

- It's important to play nice when scraping: follow the site's rules, don't overwhelm the site with too many requests, solve CAPTCHAs if they pop up, use different proxies to avoid getting blocked, switch up your user agent to stay under the radar, and handle any errors smoothly.

- Only take the data you need and don't misuse it. Being respectful and ethical is key.

In the end, while scraping dynamic sites asks for a bit more effort, the benefits of getting up-to-date, specific data are huge. With the tips we've talked about, you're ready to start pulling useful info from even the trickiest sites.

Related Questions

How to do web scraping on dynamic website?

To scrape a dynamic webpage, you have two main options:

- Dive into the JavaScript on the site to find out where it creates the content you want. This means you'll need to understand a bit about how JavaScript works and where to look.

- Use tools like Selenium in Python that can pretend to be a web browser. This way, they let the website load fully, including all the dynamic parts, before you start scraping.

How do I get dynamic data from a website?

Here's how to grab dynamic data from websites:

- Look at the website's network traffic using your browser's developer tools. This can show you where the website gets its changing data from.

- Use the browser's console to check out JavaScript that loads new stuff. You might be able to use these same functions to get data.

- Use Selenium to make sure the website is fully loaded and all the dynamic content is there before you scrape.

- If you notice patterns in how the website's URLs change, you might be able to tweak these to get different data.
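
If the network tab shows the page loading its data from a JSON endpoint, you can often request that endpoint directly and skip the browser. The sketch below shows the idea. The endpoint URL, the q and page parameters, and the items, name, and price fields are all hypothetical, so check what the real site actually sends.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_URL = "https://example.com/api/products"  # hypothetical endpoint


def build_page_url(base_url, page, query):
    """Build the URL for one page of results by changing the page parameter."""
    return f"{base_url}?{urlencode({'q': query, 'page': page})}"


def fetch_json(url, timeout=10):
    """Download and decode a JSON response (not called in this offline demo)."""
    request = Request(url, headers={"Accept": "application/json"})
    with urlopen(request, timeout=timeout) as response:
        return json.load(response)


def extract_products(payload):
    """Pull (name, price) pairs out of the assumed response shape."""
    return [(item["name"], item["price"]) for item in payload.get("items", [])]


# Offline demo with a sample payload instead of a live request.
sample_payload = json.loads('{"items": [{"name": "Trail shoe", "price": 59.99}]}')
page_url = build_page_url(API_URL, 2, "shoes")
products = extract_products(sample_payload)
print(page_url, products)
```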

How do I make my website dynamic from scratch?

To build a dynamic website from the ground up:

- Pick a server-side language like PHP, Python, or Node.js.

- Get a web server running.

- Connect your code to a database like MySQL to store your data.

- Consider using a web framework like Django (for Python) to help serve your web pages.

- Write code that asks the database for information, which will be shown on your web pages.

- Use a template language to easily display this dynamic content on your pages.

By updating the database, the content on your website can change automatically.
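
To see the core idea in a few lines, here's a tiny sketch that builds a page from database rows. It swaps in Python's built-in sqlite3 and string.Template for MySQL and a full template language, and the posts table and render_page helper are made up for illustration.

```python
import sqlite3
from html import escape
from string import Template

PAGE = Template("<html><body><h1>Latest posts</h1><ul>$items</ul></body></html>")


def render_page(conn):
    """Query the database and fill the template with the newest posts first."""
    rows = conn.execute("SELECT title FROM posts ORDER BY id DESC").fetchall()
    items = "".join(f"<li>{escape(title)}</li>" for (title,) in rows)
    return PAGE.substitute(items=items)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
conn.execute("INSERT INTO posts (title) VALUES (?)", ("Hello, world",))
before = render_page(conn)

# Updating the database changes the page without touching the template.
conn.execute("INSERT INTO posts (title) VALUES (?)", ("Second post",))
after = render_page(conn)
print(after)
```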

How to scrape dynamic websites with R?

To scrape dynamic websites using R, follow these steps:

- Install the R packages rvest and RSelenium.

- Use RSelenium to open a web browser session that loads the website completely, including any JavaScript content.

- Use rvest to parse and extract the data from the webpage source code.

- Find the data you need by using CSS selectors.

- Pull this data into R for analysis, cleaning it up and organizing it as needed.

- You can then save your cleaned data to a file, like a CSV, for further use.

Using RSelenium is key because it ensures that you're seeing the website just like a human would, with all the dynamic content fully loaded.